A restore using Restic as the plugin failed on the following setup:

cloud33, bare metal (12 worker nodes)

Restic pods have a memory request/limit of 16 GiB/32 GiB and a CPU request/limit of 1/2.

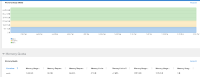

Monitoring the Restic pod in Grafana during the restore showed that it did not hit its memory limit.

The restore failed after about 60 minutes.

From the restore log:

```
[root@f07-h28-000-r640 tzahi]# velero restore logs perf-2.1.0-nfs-restic-32gb --insecure-skip-tls-verify | grep -i error
time="2022-07-26T11:31:12Z" level=error msg="unable to successfully complete restic restores of pod's volumes" error="timed out waiting for all PodVolumeRestores to complete" logSource="pkg/restore/restore.go:1560" restore=openshift-adp/perf-2.1.0-nfs-restic-32gb
time="2022-07-26T11:31:12Z" level=error msg="Velero restore error: timed out waiting for all PodVolumeRestores to complete" logSource="pkg/controller/restore_controller.go:497" restore=openshift-adp/perf-2.1.0-nfs-restic-32gb
```
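Both error lines point at the same root cause from two different code paths. As a minimal sketch for pulling the key fields out of such lines, assuming each line is a space-separated `key=value` (logfmt-style) record with double-quoted values, as in the two lines above (`parse_logfmt` is a hypothetical helper, not part of Velero):

```python
import shlex

# The two error lines from the restore log, one entry per log line.
LOG_LINES = [
    'time="2022-07-26T11:31:12Z" level=error msg="unable to successfully complete restic restores of pod\'s volumes" error="timed out waiting for all PodVolumeRestores to complete" logSource="pkg/restore/restore.go:1560" restore=openshift-adp/perf-2.1.0-nfs-restic-32gb',
    'time="2022-07-26T11:31:12Z" level=error msg="Velero restore error: timed out waiting for all PodVolumeRestores to complete" logSource="pkg/controller/restore_controller.go:497" restore=openshift-adp/perf-2.1.0-nfs-restic-32gb',
]


def parse_logfmt(line: str) -> dict:
    """Hypothetical helper: split a key=value log line into a dict.

    Assumes logfmt-style records; shlex handles the double-quoted values
    (including the apostrophe inside "pod's").
    """
    fields = {}
    for token in shlex.split(line):
        key, _, value = token.partition("=")
        fields[key] = value
    return fields


for entry in map(parse_logfmt, LOG_LINES):
    if entry.get("level") == "error":
        # Prefer the dedicated error field; fall back to msg when it is absent.
        print(entry["logSource"], "->", entry.get("error", entry["msg"]))
```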

```
Name:         perf-2.1.0-nfs-restic-32gb
Namespace:    openshift-adp
Labels:       <none>
Annotations:  <none>

Phase:                       PartiallyFailed (run 'velero restore logs perf-2.1.0-nfs-restic-32gb' for more information)
Total items to be restored:  29
Items restored:              29

Started:    2022-07-26 10:31:11 +0000 UTC
Completed:  2022-07-26 11:31:12 +0000 UTC

Warnings:
  Velero:     <none>
  Cluster:    could not restore, CustomResourceDefinition "clusterserviceversions.operators.coreos.com" already exists. Warning: the in-cluster version is different than the backed-up version.
  Namespaces:
    perf-datagen-case1-nfs:  could not restore, RoleBinding "system:deployers" already exists. Warning: the in-cluster version is different than the backed-up version.
                             could not restore, RoleBinding "system:image-builders" already exists. Warning: the in-cluster version is different than the backed-up version.
                             could not restore, RoleBinding "system:image-pullers" already exists. Warning: the in-cluster version is different than the backed-up version.

Errors:
  Velero:     timed out waiting for all PodVolumeRestores to complete
  Cluster:    <none>
  Namespaces: <none>

Backup:  perf-2.1.0-nfs-restic-32gb

Namespaces:
  Included:  all namespaces found in the backup
  Excluded:  <none>

Resources:
  Included:        *
  Excluded:        nodes, events, events.events.k8s.io, backups.velero.io, restores.velero.io, resticrepositories.velero.io
  Cluster-scoped:  auto

Namespace mappings:  <none>

Label selector:  <none>

Restore PVs:  auto

Restic Restores:
  New:
    perf-datagen-case1-nfs/perf-datagen-case1-8495854c4d-gmkq4: vol-0

Existing Resource Policy:  <none>

Preserve Service NodePorts:  auto
```
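The "about 60 minutes" figure can be checked against the Started/Completed timestamps in the restore description. A minimal sketch, assuming the timestamp format shown there (`YYYY-MM-DD HH:MM:SS +0000 UTC`):

```python
from datetime import datetime

# Assumed format of the Started/Completed fields, e.g. "2022-07-26 10:31:11 +0000 UTC".
FMT = "%Y-%m-%d %H:%M:%S %z UTC"

started = datetime.strptime("2022-07-26 10:31:11 +0000 UTC", FMT)
completed = datetime.strptime("2022-07-26 11:31:12 +0000 UTC", FMT)

# Both datetimes are timezone-aware, so the subtraction is well defined.
elapsed = completed - started
minutes, seconds = divmod(int(elapsed.total_seconds()), 60)
print(f"restore ran for {minutes} min {seconds} s")  # restore ran for 60 min 1 s
```

The elapsed time lines up with the moment Velero reported `timed out waiting for all PodVolumeRestores to complete`; this arithmetic alone does not show which timeout value was in effect.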

Restic pod CPU/memory configuration:

```yaml
configuration:
  restic:
    enable: true
    podConfig:
      resourceAllocations:
        limits:
          cpu: 2
          memory: 32768Mi
        requests:
          cpu: 1
          memory: 16384Mi
```
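The memory values in the configuration use Kubernetes binary suffixes, so `16384Mi`/`32768Mi` correspond to the 16 GiB/32 GiB quoted at the top. A small sketch to confirm the conversion; `memory_bytes` is a hypothetical helper that only handles the `Ki`/`Mi`/`Gi` suffixes, not the full Kubernetes quantity grammar:

```python
# Binary suffixes used by Kubernetes memory quantities.
# Assumption: only this subset matters here (no decimal suffixes such as "M" or "G").
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def memory_bytes(quantity: str) -> int:
    """Hypothetical helper: convert a quantity such as '32768Mi' to bytes."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: plain bytes


# Values copied from the Restic podConfig.resourceAllocations above.
resources = {
    "requests": {"cpu": 1, "memory": "16384Mi"},
    "limits": {"cpu": 2, "memory": "32768Mi"},
}

for kind, spec in resources.items():
    gib = memory_bytes(spec["memory"]) / 2**30
    print(f"{kind}: cpu={spec['cpu']} memory={gib:g}GiB")
```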

Versions:

OCP: 4.10.21

OADP: 1.1.0 (iib:279750)